Prologue

When adding a virtual object to a real scene, the renderer has to shade the object according to the incident light it receives. The renderer may also have to account for changes to reality caused by the object, such as the shadow it casts. But before we proceed, let’s not reinvent the wheel: great inspiration can be drawn from what the film industry has learned about augmenting reality properly, and from taking a step back to learn from history.

Introduction to the macabre

The earliest form of augmented reality can be found shortly after the introduction of the Lanterna Magica in the mid-17th century.

Giovanni Fontana, Christiaan Huygens and/or Athanasius Kircher invented the first apparatus to project images onto a flat surface: a type of lantern that focuses light through a painted glass image. The glass can be moved through a slit on the side of the projection apparatus, much like in a more modern slide projector.

It wasn’t long until the device became known as the “lantern of fear” when it was used to conjure up the occult. Apparitions of the dead, projected onto smoke with rapidly exchanged images, convinced an entire generation that some stage performers had actually attained a special connection to the afterlife. Combined with the bizarre fascination for spiritualism at that time, these shows caused such a commotion that eventually the authorities stepped in to end it all.

What remained, however, was a new kind of artist: a cross-breed between magician and scientist.

Moving images

The French illusionist and filmmaker Georges Méliès accidentally discovered what became known as the stop-trick, whereby a movie shot is halted and the filmed scene is substituted with something different. A great example is his short movie The Hilarious Poster, filmed in 1906. A regular poster on a street takes on a life of its own, with horseplay directed at the people who happen to walk by.

In the modern film industry, early effects such as the lightsaber glow seen in the first Star Wars movies were inserted via rotoscoping, a technique where the movie is edited and enhanced frame by frame with a newly painted overlay. Initially, the laser sword was a rod with Scotchlite attached to it (the material used for traffic sign reflectors), but it turned out that it didn’t reflect enough light for the movie, so the effect was added later.

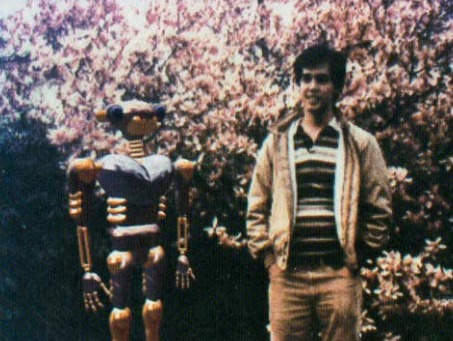

Rotoscoping, however, is tedious, and surely there are better, more automated ways to add effects to a movie after it has been shot. A curious case is Williams and Chou’s “Interface”, shown at SIGGRAPH 1985, which features a shiny robot being kissed by a woman. The texture-mapped robot was rendered and added afterwards, while the reflection of this romantic scene on its polished surfaces was filmed using a mirrored ball. Two years earlier, Lance Williams had published a paper that introduced mip-mapping to the world of computer graphics. In an almost Fermat-like manner, the paper contains a paragraph which reads:

If we represent the illumination of a scene as a two-dimensional map, highlights can be effectively antialiased in much the same way as textures. Blinn and Newell [I] demonstrated specular reflection using an illumination map. The map was an image of the environment (a spherical projection of the scene, indexed by the X and Y components of the surface normals) which could be used to cast reflections onto specular surfaces. The impression of mirrored facets and chrome objects which can be achieved with this method is striking; Figure (16) provides an illustration. Reflectance mapping is not, however, accurate for local reflections. To achieve similar results with three dimensional accuracy requires ray-tracing.

Using Jim Blinn’s modified environment mapping technique with real images instead of computer-generated ones did the trick. Course notes from Miller and Hoffman at SIGGRAPH 1984 added the idea of pre-convolving these images to simulate reflection off glossy or diffuse materials. Eventually, the concept art became a reality when the technique was used in Flight of the Navigator in 1986, followed by The Abyss in 1989 and Terminator 2 in 1991.
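To make the lookup a bit more concrete, here is a minimal sketch of how a photographed mirror ball can be sampled for a reflection. It assumes the ball was shot orthographically along the negative Z axis and uses the classic sphere-map parameterization; the function names `reflect`, `sphere_map_uv` and `lookup` are my own and not taken from any of the papers mentioned above.

```python
import math

def reflect(view, normal):
    """Mirror the incoming view direction about a unit surface normal."""
    d = sum(v * n for v, n in zip(view, normal))
    return tuple(v - 2.0 * d * n for v, n in zip(view, normal))

def sphere_map_uv(r):
    """Map a unit reflection vector to [0, 1]^2 coordinates on a mirror-ball image.

    Assumption: the ball was photographed orthographically along -Z, so the
    ball normal that produces reflection r is the normalized vector r + (0, 0, 1).
    """
    rx, ry, rz = r
    m = 2.0 * math.sqrt(rx * rx + ry * ry + (rz + 1.0) * (rz + 1.0))
    if m < 1e-8:
        # Direction straight behind the ball: it maps to the whole rim,
        # so any rim point is as good as another.
        return (1.0, 0.5)
    return (rx / m + 0.5, ry / m + 0.5)

def lookup(env_map, uv):
    """Nearest-neighbour fetch from an image stored as a list of rows."""
    height, width = len(env_map), len(env_map[0])
    x = min(int(uv[0] * width), width - 1)
    y = min(int(uv[1] * height), height - 1)
    return env_map[y][x]

# A surface facing the camera reflects the centre of the ball image.
view = (0.0, 0.0, -1.0)
uv_center = sphere_map_uv(reflect(view, (0.0, 0.0, 1.0)))
```

The directions near the rim of the ball are heavily compressed, which is one reason why later pipelines often resample the ball into other layouts before using it.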

What makes this technique so elegant is that it circumvents the need to track light sources in your scene. One could also extract single point light sources from a hemispherical image, but small reflected highlights from shiny objects can easily distract the algorithm and cause flickering when using low sampling rates. A reflection map instead represents the entire lighting configuration and is therefore physically more plausible than a bunch of point lights.
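Because the map holds the whole lighting configuration, diffuse shading can be obtained by summing over every texel instead of over a handful of extracted point lights. The following is a rough sketch of that idea, assuming a single-channel latitude-longitude map with the Y axis pointing up; the layout and the name `irradiance` are assumptions for illustration. Evaluating it once per normal direction and storing the results is what pre-convolving a map for diffuse surfaces amounts to.

```python
import math

def irradiance(env_map, normal):
    """Cosine-weighted sum over all texels of a latitude-longitude radiance map.

    env_map: list of rows of scalar radiance values (assumed layout:
             row 0 is the +Y pole, columns wrap around the Y axis).
    normal:  unit surface normal as an (x, y, z) tuple.
    """
    height, width = len(env_map), len(env_map[0])
    d_theta = math.pi / height
    d_phi = 2.0 * math.pi / width
    total = 0.0
    for row in range(height):
        theta = (row + 0.5) * d_theta            # polar angle measured from +Y
        solid_angle = math.sin(theta) * d_theta * d_phi
        for col in range(width):
            phi = (col + 0.5) * d_phi            # azimuth around +Y
            direction = (math.sin(theta) * math.cos(phi),
                         math.cos(theta),
                         math.sin(theta) * math.sin(phi))
            cosine = sum(d * n for d, n in zip(direction, normal))
            if cosine > 0.0:                     # only the hemisphere above the surface
                total += env_map[row][col] * cosine * solid_angle
    return total

# Sanity check: a uniformly white environment yields an irradiance close to pi.
white = [[1.0] * 64 for _ in range(32)]
e_up = irradiance(white, (0.0, 1.0, 0.0))
```

This brute-force loop is far too slow for real-time use at realistic resolutions, but it shows why a small bright highlight in the map only shifts the result slightly instead of making it flicker.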

There is, however, no inherent necessity to augment reality with objects that have to look physically plausible. First and foremost, it is important that whatever has been added to the scene is in its proper place at all times (i.e. geometrically registered). One grandiose example is Jessica Rabbit’s performance of “Why Don’t You Do Right?”:

As you can see, Jessica sometimes vanishes behind other objects or people as if she were actually at the correct position in the 3D scene. Since Jessica is a cartoon character, her animators of course took care of this by hand, but a renderer can use invisible impostors to create a virtualized depth buffer of the real scene if none exists.
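In a renderer, the occlusion test described above boils down to a per-pixel depth comparison. Here is a small sketch, assuming the invisible impostors have already been rasterized into a depth image of the real scene; the function `composite` and its parameter names are hypothetical, and pixels without a virtual fragment are marked with an infinite depth.

```python
INF = float("inf")

def composite(camera_rgb, phantom_depth, virtual_rgb, virtual_depth):
    """Overlay virtual pixels on the camera image where they are nearer than reality.

    camera_rgb:    real image, list of rows of pixel values
    phantom_depth: depth of the invisible stand-in geometry per pixel
    virtual_rgb:   rendered virtual object, same size
    virtual_depth: depth of the virtual object per pixel (INF where empty)
    """
    result = []
    for cam_row, pd_row, vr_row, vd_row in zip(camera_rgb, phantom_depth,
                                               virtual_rgb, virtual_depth):
        row = []
        for cam, pd, vr, vd in zip(cam_row, pd_row, vr_row, vd_row):
            # The virtual fragment wins only if it lies in front of the real surface.
            row.append(vr if vd < pd else cam)
        result.append(row)
    return result

# One scanline: the virtual object is visible, hidden, then absent.
frame = composite(
    camera_rgb=[["wall", "person", "wall"]],
    phantom_depth=[[5.0, 1.0, 5.0]],
    virtual_rgb=[["jessica", "jessica", None]],
    virtual_depth=[[2.0, 2.0, INF]],
)
```

The impostors themselves never show up in the output. They only contribute depth, which is exactly what lets a real person walk in front of a virtual character.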

Note that the clip features another important property, which is scene consistency: Jessica’s reflection on the real catwalk can be seen 14 seconds into the clip. The editors know about the reflective material and can draw the reflection so that it matches the viewing angle of the camera. The shadow of her hand is also visible on some dude’s face at exactly the one-minute mark. Jessica’s presence in the scene changes reality (and not just by making the audience go nuts)!

Of course, ever since stop-motion was declared extinct by Phil Tippett while producing the animations for Jurassic Park, having computer-generated effects in movies has become the norm. But irrespective of the technique used to augment something into a real scene, capturing reality correctly and having consistent light interaction is key to creating the proper illusion of a mixed reality. Real and virtual light sources, direct and indirect, influence the virtual and real space. The importance of this is nowhere more apparent than when watching a bad, low-budget sci-fi movie.

Because your typical SFX director may go overboard with the special effects in a movie scene, it might happen that the only real thing left is the actor himself. Humans are sensitive to facial features, and it is therefore necessary to edit the appearance of skin reflections to match the scene. You don’t want to end up with a green aura from the studio set engulfing the actor. SIGGRAPH 2000 brought a revolution to the film industry with the introduction of the Light Stage. In it, the actor’s face is captured multiple times under varying illumination. After enough samples have been gathered, one can effectively invert the rendering equation for each pixel on the actor’s face, extract the BSDF of the skin and reproduce it under different illumination.
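A simpler cousin of this idea is worth sketching, because it shows why capturing many lighting conditions is so useful. Since light transport is linear, a face under new illumination can be approximated as a weighted sum of the photographs taken under each individual light. Note that this is plain image-based relighting and not the BSDF extraction described above; the function `relight` and its inputs are hypothetical, single-channel stand-ins.

```python
def relight(basis_images, light_weights):
    """Combine photographs taken under single lights into one relit image.

    basis_images:  list of images, one per captured light direction,
                   each a list of rows of scalar intensities
    light_weights: intensity of the new environment in each of those directions
    """
    if len(basis_images) != len(light_weights):
        raise ValueError("need exactly one weight per basis image")
    height, width = len(basis_images[0]), len(basis_images[0][0])
    result = [[0.0] * width for _ in range(height)]
    for image, weight in zip(basis_images, light_weights):
        for y in range(height):
            for x in range(width):
                # Linearity of light: contributions of separate lights simply add up.
                result[y][x] += weight * image[y][x]
    return result

# Two captures of a two-pixel "face": lit from the left, then from the right.
lit_left = [[1.0, 0.0]]
lit_right = [[0.0, 2.0]]
relit = relight([lit_left, lit_right], [0.5, 0.25])
```

In practice the weights would be sampled from a captured environment, such as the reflection maps discussed earlier, so that the actor picks up the lighting of the scene they are composited into.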

This method doesn’t just work with faces, but with materials in general. There is a broad range of reconstruction algorithms for materials, but the basic method still includes a dome which captures samples of a surface under varying illumination conditions. Every time simulated light is supposed to interact with a real surface, its properties have to be known upfront.

Light and Magic

So in essence, the evolution of the film industry from a simple projector used in 17th century theater to the intricate details produced for The Lord of the Rings movies already provides enough knowledge on the problem of correct augmentation. Over time, artists figured out several methods to capture real light, honor light interaction with real and virtual surfaces and improve overall consistency by reconstructing the world in a more elaborate manner.

In the next articles, I will go into detail on how to translate most of these methods to real-time algorithms. We’ll start off by creating a basic pipeline for an AR renderer, then shade virtual geometry, and finally move on to relighting reality.

References

- Fulgence Marion, The Wonders Of Optics

- Jim Blinn, Texture and reflection in computer generated images

- Wikipedia, Rotoscoping

- Lance Williams, Pyramidal parametrics

- Disney, Flight of the Navigator (Trailer)

- Paul Debevec, The Story of Reflection Mapping

- Georges Méliès, The Hilarious Poster

- Wikipedia, Georges Méliès

- Robert Zemeckis, Who Framed Roger Rabbit

- USC, The Light Stages at UC Berkeley and USC ICT