Every graphics sub-discipline has its own holy grail, a go-to publication that everyone is expected to have read to pick up the basics of the field: Physically Based Rendering has a book by the same name, Path Tracing has Eric Veach’s dissertation, real-time global illumination has Reflective Shadow Maps.

For Augmented Reality rendering, this go-to publication is Paul Debevec’s Rendering Synthetic Objects into Real Scenes.

Fusing two realities

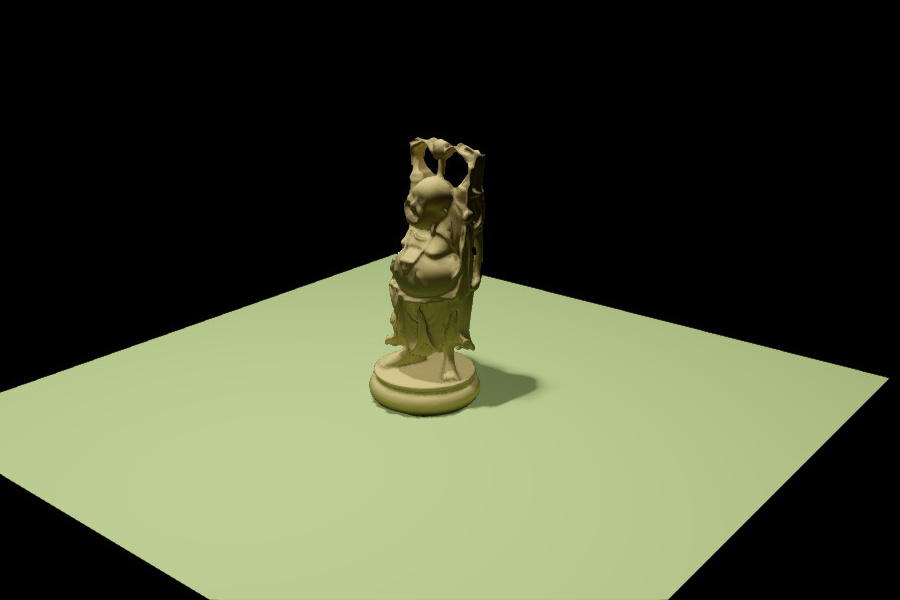

Imagine the following situation: You have a green-ish surface, and you want to project a virtual object on top of it, like this Stanford Buddha.

Just like real objects on this surface, the Stanford Buddha should cast a shadow onto it. To do this, you figure out the position of a real light source (let’s pretend the room in which this shot was taken has only one light), and put a virtual copy of it into the virtual scene at the same relative location.

What now? To render a proper shadow, we also need a surface for the shadow to fall on. We can use a green-ish virtual surface as a placeholder for the green-ish looking real one to receive the shadow. Let’s give it a try.

But how do we now fuse this image with the real background? Do we just paste it over the image?

That doesn’t look quite right. So far we only had a vague interpretation of what the underlying real surface looks like: Green-ish. The description is not very accurate, and the result shows.

Maybe we could reconstruct the real surface more accurately and then get a more correct looking image. For instance, we could texture the so far green-ish placeholder with an image of the real surface. Of course from that texture we need to carefully remove all the interfering lights first (a process sometimes called delighting) so we’re really left with just the albedo. Assume we did this correctly and now have this texture ready. Just put it on top of the surface and try again.

It looks better, but now we run into other problems:

- The scaling of the virtual plane and its texture is slightly off, which makes it look a bit blurry.

- The tracker didn’t reconstruct the real world position of this patch down to the nanometer, so we end up with some very annoying seams around the border.

- The renderer uses a point light to model the real world light source. You can see the falloff toward the back of the plane, where it is darker than it should be. The real light source in that room is a large neon tube, and its incident light spreads much more evenly across the surface.

Also, it looks like there is some type of coloring issue. Maybe it’s a gamma problem? Is the reconstructed albedo texture really correct? Maybe we need a path-tracer? Is there a red bounce missing from the plane in the back? Maybe this, maybe that, maybe maybe maybe…

Early Augmented Reality applications in the 90s dealt with this fusion-problem in various ways:

- Just leave it as it is.

- If you need shadows, just make the pixels darker. Even if there are multiple non-white lights in the room no one will notice.

- Reconstruct the entire object rather than just a patch of it. At least then there won’t be any visible seams or other texture-related differences.

- Blend the partly reconstructed surface with the real background image.

- Why invest so much hard work rendering correctly when you can make the problem space much easier? Just use simpler real world objects! For instance a diffuse, homogeneous green surface with no texture at all.

Differential Rendering

While all of these tactics can work, they are unsatisfying at best and often require manual tweaking to make just that one scene look good.

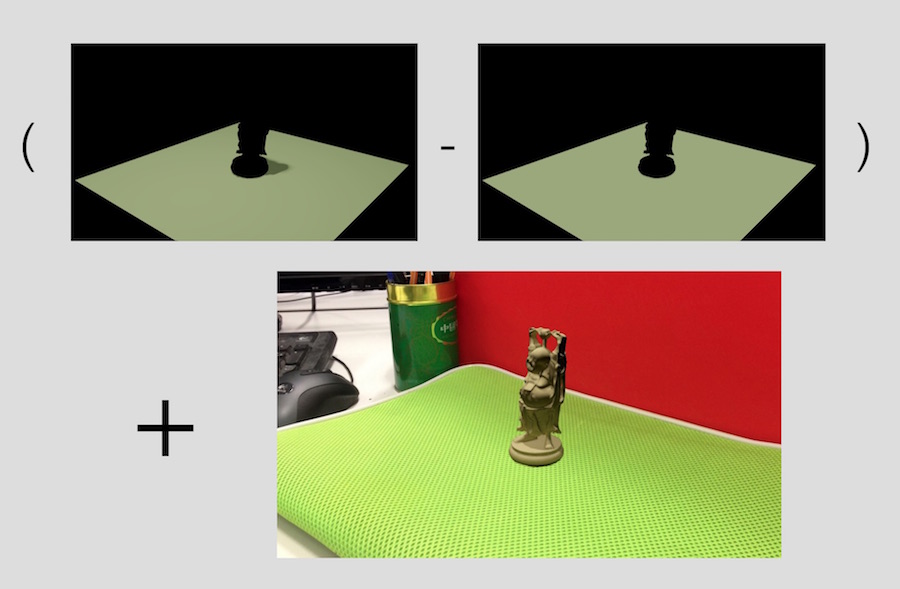

The main weakness in the above scenarios is this: They rely on the placeholder to represent reality as closely as possible. Could we perhaps get rid of the placeholder entirely and just be left with the actual change that the Buddha introduces to the real scene (i.e., the shadow)?

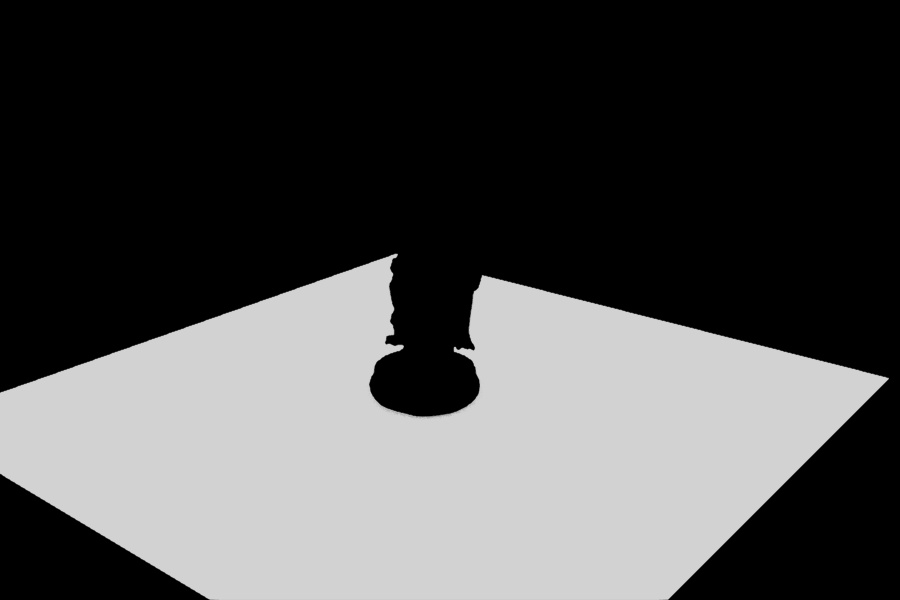

This is the question that Differential Rendering deals with. The idea is simple: Extract the difference introduced by the object and leave out the rest. How? Let’s look at it again. We have a patch of the green floor that is supposed to be a reconstruction of the real world. If we tag all the pixels of the reconstructed surface in a mask, we can isolate the green placeholder.

We can render the same patch again, but without the object, and repeat the same process of masking out the result. Subtracting the render without the object from the render with it leaves us with only the difference due to the object’s presence.

The shadowed area will produce negative energy, as there is less energy present on the augmented patch than before, while the difference is simply zero wherever the object has no influence. If we add this difference back to our very first image, the real background, the shadow ends up in the real world and looks like it belongs there.
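The steps above can be sketched in a few lines of NumPy. This is only a minimal sketch under some assumptions that are not spelled out in the text: all three images are float arrays of shape (height, width, 3) holding linear (not gamma-encoded) values, the two masks are boolean arrays of shape (height, width), and the function and parameter names are made up for illustration.

```python
import numpy as np

def differential_composite(background, with_object, without_object,
                           surface_mask, object_mask):
    """Add the change caused by a virtual object to a real background image.

    background      -- photo of the real scene
    with_object     -- render of placeholder surface plus virtual object
    without_object  -- render of the placeholder surface alone
    surface_mask    -- True where the placeholder surface is visible
    object_mask     -- True where the virtual object itself is visible
    (All names here are hypothetical, chosen for this sketch.)
    """
    background = np.asarray(background, dtype=np.float64)
    with_object = np.asarray(with_object, dtype=np.float64)
    without_object = np.asarray(without_object, dtype=np.float64)

    # Difference introduced by the object, restricted to the placeholder.
    # Shadows show up as negative values, bounce light as positive ones.
    delta = np.where(surface_mask[..., None],
                     with_object - without_object, 0.0)

    # Add the difference to the real image; the placeholder itself cancels out.
    result = background + delta

    # The object's own pixels come straight from the render.
    result = np.where(object_mask[..., None], with_object, result)

    # A displayable image cannot be negative, so clamp at zero.
    return np.clip(result, 0.0, None)
```

Note that the color of the placeholder never reaches the final image directly. Only the change between the two renders does, which is why a rough green-ish approximation of the real surface can be enough.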

Conclusion

The main strength of Differential Rendering is its simplicity: it boils down to a post-processing effect (you can easily repeat the above steps with Gimp, Krita or Photoshop) that extracts the influence a virtual object has on its surroundings, such as blocking real light, reflecting light onto nearby surfaces or transmitting light. This difference is then simply added onto a real background image together with the object. The viewer is left with a sense that the object is actually in the scene rather than being glued on top of it.

As a side note, the extracted difference contains positive contributions (such as bounce light) as well as negative ones (the shadows subtract light from the background). The latter is a quantity called antiradiance and has been discussed in a few publications, most notably Implicit Visibility and Antiradiance for Interactive Global Illumination. I will come back to this some other time.
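To make the two kinds of contribution tangible, the difference image can be split by sign. The following is a small sketch, assuming the difference is already available as a float array; the function name is hypothetical, and the "removed" part only loosely corresponds to the negative contribution described above, not to the full formulation in the antiradiance paper.

```python
import numpy as np

def split_difference(delta):
    """Split a difference image into light added and light removed.

    Both returned arrays are non-negative, and added - removed
    reproduces the input difference.
    """
    delta = np.asarray(delta, dtype=np.float64)
    added = np.maximum(delta, 0.0)     # e.g. light bounced off the object
    removed = np.maximum(-delta, 0.0)  # e.g. light blocked by the object
    return added, removed
```

Viewing the two parts separately can be a handy debugging aid: a shadow that is too dark or a bounce that is missing shows up in only one of them.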

More importantly, if the renderer is able to compute global illumination, the entire extracted difference should integrate to zero: The object is merely “re-routing” light to a different location, and therefore all energy is conserved.

References

- Paul Debevec, Rendering Synthetic Objects into Real Scenes

- Dachsbacher et al., Implicit Visibility and Antiradiance for Interactive Global Illumination